
Simplify Distributed Storage with GlusterFS: A Quick Guide

Learn how to set up distributed storage with GlusterFS for scalable, reliable, and efficient data management

Published on: Mar 2, 2015

Last updated on: Feb 27, 2025

Persistent Storage Requirement

A common issue with cloud applications is the requirement for persistent storage. If you’re spinning up instances all across your datacentre, you have no idea which physical host will end up serving your applications. This points to the need for some sort of networked file system. If you’ve ever used a Linux-based network, you’ve probably heard of NFS. But a traditional NFS export is served by a single server, which is both a bottleneck and a single point of failure, so NFS is a poor choice for distributed, concurrent systems. As that is what cloud computing is all about, we need a better solution.

GlusterFS Overview

GlusterFS is an “open source, distributed file system designed for massive scale.” However, this is slightly misleading. Gluster isn’t really a filesystem in its own right; instead, it aggregates several existing filesystems so that data is distributed across multiple hosts. The underlying filesystem is usually XFS (recommended by the GlusterFS developers), although ZFS and plain ext4 are also commonly used.
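To make “distributed across multiple hosts” a little more concrete, here is a minimal Python sketch of hash-based file placement, loosely modelled on the idea behind Gluster’s distribute translator. It is an illustration under stated assumptions, not Gluster’s code: the real translator uses its own hash function and stores per-directory hash ranges on the bricks, whereas this sketch uses `zlib.crc32` as a stand-in hash and simply splits the 32-bit hash space evenly. The brick names are placeholders.

```python
import zlib

# Toy model only: crc32 is a stand-in for Gluster's real hash function.
HASH_SPACE = 2 ** 32  # crc32 returns unsigned 32-bit values in Python 3


def build_layout(bricks):
    """Split the 32-bit hash space into contiguous ranges, one per brick."""
    if not bricks:
        raise ValueError("need at least one brick")
    step = HASH_SPACE // len(bricks)
    layout = []
    for i, brick in enumerate(bricks):
        start = i * step
        # The last brick absorbs any remainder so the whole space is covered.
        end = HASH_SPACE - 1 if i == len(bricks) - 1 else start + step - 1
        layout.append((start, end, brick))
    return layout


def brick_for(filename, layout):
    """Return the brick whose hash range contains the hash of the file name."""
    h = zlib.crc32(filename.encode("utf-8"))
    for start, end, brick in layout:
        if start <= h <= end:
            return brick
    raise LookupError("layout does not cover hash %d" % h)


if __name__ == "__main__":
    # Placeholder brick names, matching the paths used later in this guide.
    bricks = ["host1:/export/brick/volume", "host2:/export/brick/volume"]
    layout = build_layout(bricks)
    for name in ["report.pdf", "notes.txt", "photo.jpg"]:
        print(name, "->", brick_for(name, layout))
```

The point of this design is that a client can work out where a file lives from its name alone, so there’s no central metadata server to consult (or to lose) on every lookup.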

So, why use GlusterFS for your storage needs? I’ll outline a few of the features and you can decide for yourself.

GlusterFS is:

- Designed for commodity hardware: you can spread your data across redundant servers, and if one goes down the failure should be transparent to clients. You can also add or remove disks on the fly.

- Accessible: Gluster exports its files over NFS and CIFS (SMB) for clients that cannot use the native Gluster client.

- Easy to set up: the only requirement for GlusterFS is a kernel that supports FUSE (2.6.14 or later).
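If you want to check the FUSE requirement before installing anything, a small Python helper will do. This is a sketch that assumes the kernel release string begins with `major.minor[.patch]`, as it does on typical Linux distributions. It only compares version numbers against the 2.6.14 minimum above and does not check that the `fuse` module is actually loaded.

```python
import platform
import re

# Minimum kernel version for FUSE, as quoted in the requirements above.
FUSE_MIN_KERNEL = (2, 6, 14)


def parse_kernel_version(release):
    """Extract (major, minor, patch) from a release string such as '3.13.0-24-generic'."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if match is None:
        raise ValueError("unrecognised kernel release: %r" % release)
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch or 0)


def kernel_supports_fuse(release=None):
    """True if the given (or the running) kernel is at least FUSE_MIN_KERNEL."""
    if release is None:
        release = platform.release()  # the running kernel, e.g. '3.13.0-24-generic'
    return parse_kernel_version(release) >= FUSE_MIN_KERNEL


if __name__ == "__main__":
    print("FUSE-capable kernel:", kernel_supports_fuse())
```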

GlusterFS Guide

Note: before starting, ensure that each node’s hostname resolves correctly to that host’s address, and that the nodes’ clocks are synchronised using NTP.
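Since the peers are probed by address or name, it’s worth checking resolution before you start. The helper below is hypothetical (it isn’t part of GlusterFS): it resolves each node name with Python’s `socket.gethostbyname` and reports any name that doesn’t map to the address you expect. The resolver is a parameter so the check can be exercised without touching DNS.

```python
import socket


def resolution_mismatches(expected, resolver=socket.gethostbyname):
    """Return {hostname: (expected, actual)} for every host that resolves wrongly.

    Hypothetical helper, not part of GlusterFS.
    `expected` maps hostnames to the IPv4 address each should resolve to;
    `actual` is None when the name does not resolve at all.
    """
    mismatches = {}
    for host, want in expected.items():
        try:
            got = resolver(host)
        except socket.gaierror:
            got = None  # name lookup failed outright
        if got != want:
            mismatches[host] = (want, got)
    return mismatches
```

On each node you would call it with something like `resolution_mismatches({"gluster1": "192.0.2.11", "gluster2": "192.0.2.12"})`, substituting your own names and addresses; an empty result means every name resolves as expected.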

For a quick replicated GlusterFS setup, run the following commands on each of two Ubuntu hosts:

sudo fallocate -l 15G /brick

sudo add-apt-repository ppa:semiosis/ubuntu-glusterfs-3.5

sudo apt-get update

sudo apt-get -y install glusterfs-server xfsprogs

sudo mkfs.xfs -i size=512 /brick

sudo mkdir -p /export/brick

sudo mount /brick /export/brick

sudo mkdir -p /export/brick/volume

sudo sh -c "echo '/brick /export/brick xfs defaults 0 0' >> /etc/fstab"
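The brick is backed by a plain file, and a mistake in the `/etc/fstab` line might otherwise go unnoticed until the next reboot. Here’s a minimal Python sketch of a sanity check, assuming the standard whitespace-separated fstab fields (device, mount point, type, options, dump, pass). The function names are hypothetical.

```python
def fstab_entry(fstab_text, mount_point):
    """Return the fields of the fstab line that mounts `mount_point`, or None.

    Hypothetical helper; assumes the standard six whitespace-separated fields.
    """
    for line in fstab_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split()
        if len(fields) >= 3 and fields[1] == mount_point:
            return fields
    return None


def brick_in_fstab(fstab_text, source="/brick", mount_point="/export/brick", fstype="xfs"):
    """True if fstab mounts `source` on `mount_point` with the expected filesystem type."""
    fields = fstab_entry(fstab_text, mount_point)
    return fields is not None and fields[0] == source and fields[2] == fstype
```

Running `brick_in_fstab(open("/etc/fstab").read())` on a node should return `True` once the `echo` command above has been run.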

Then, on one of the hosts, run the following:

sudo gluster peer probe [address.of.host2]

sudo gluster volume create gv0 replica 2 \
[address.of.host1]:/export/brick/volume \
[address.of.host2]:/export/brick/volume

sudo gluster volume info

sudo gluster volume set gv0 auth.allow \
[client1.address,client2.address,...,clientn.address]

sudo gluster volume start gv0
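With `replica 2`, Gluster groups bricks in the order they appear on the command line: each run of two consecutive bricks forms a replica set, and the total number of bricks has to be a multiple of the replica count. The Python sketch below models only that grouping rule so a brick list can be sanity-checked before running `gluster volume create`. It does not call Gluster, and the `host:/path` strings are placeholders. It also flags any replica set whose bricks all sit on one host, since such a set can’t survive that host going down.

```python
def replica_sets(bricks, replica):
    """Group bricks into replica sets of size `replica`, in command-line order.

    Toy model of the grouping rule only; it does not talk to Gluster.
    """
    if replica < 1:
        raise ValueError("replica count must be at least 1")
    if len(bricks) % replica != 0:
        raise ValueError(
            "%d bricks is not a multiple of replica count %d" % (len(bricks), replica)
        )
    return [bricks[i:i + replica] for i in range(0, len(bricks), replica)]


def single_host_sets(sets):
    """Return replica sets whose bricks all live on one host (lost if that host fails)."""
    risky = []
    for group in sets:
        hosts = {brick.split(":", 1)[0] for brick in group}
        if len(group) > 1 and len(hosts) == 1:
            risky.append(group)
    return risky


if __name__ == "__main__":
    # Placeholder addresses mirroring the volume create command above.
    bricks = ["host1:/export/brick/volume", "host2:/export/brick/volume"]
    sets = replica_sets(bricks, 2)
    print(sets)
    print("single-host sets:", single_host_sets(sets))
```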

Then on a client:

sudo add-apt-repository ppa:semiosis/ubuntu-glusterfs-3.5

sudo apt-get update

sudo apt-get -y install glusterfs-client

sudo mkdir -p /mnt/volume

sudo mount -t glusterfs [address.of.host]:/gv0 /mnt/volume

sudo chmod a+w /mnt/volume

echo "Hello from $HOSTNAME" > /mnt/volume/test.txt

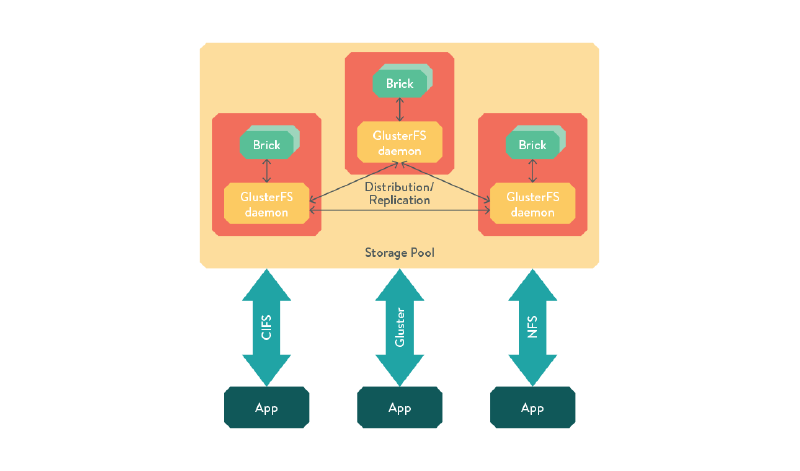
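If the mount silently failed, `/mnt/volume` is just an empty local directory and the test file lands on the client’s own disk. One way to guard against that is to look the mount point up in `/proc/mounts`, where a native GlusterFS mount normally appears with the filesystem type `fuse.glusterfs`. A minimal Python sketch, assuming the Linux `/proc/mounts` format; the function names are hypothetical.

```python
def mount_type(mounts_text, mount_point):
    """Return the filesystem type recorded for `mount_point`, or None if not mounted.

    Hypothetical helper. Each /proc/mounts line is:
    device mountpoint fstype options dump pass
    """
    # Paths containing spaces appear octal-escaped (as \040) and are not decoded here.
    fstype = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[1] == mount_point:
            fstype = fields[2]  # keep the last match: later mounts shadow earlier ones
    return fstype


def is_glusterfs_mount(mount_point, mounts_path="/proc/mounts"):
    """True if `mount_point` is currently a native (FUSE) GlusterFS mount."""
    with open(mounts_path) as f:
        return mount_type(f.read(), mount_point) == "fuse.glusterfs"
```

For example, `is_glusterfs_mount("/mnt/volume")` should return `True` on the client after the `mount -t glusterfs` step.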

GlusterFS Architecture Overview

A quick overview of GlusterFS concepts:

- Trusted pool - all the hosts in a given cluster.

- Node - any server in the trusted pool; the terms node, host and server are used interchangeably.

- Brick - any filesystem, preferably a physical disk formatted with XFS.

- Export - the mount path of the brick(s) on a server.

- Subvolume - a brick after being processed by at least one translator.

- Volume - the final share after passing through all the translators.

- Translator - takes one or more subvolumes or bricks, applies some function to them (for example, replication) and offers the result as a new subvolume.

Figure - GlusterFS Architecture
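The translator idea is essentially a stack of objects that share one interface, each wrapping the one(s) below it. The toy Python model below illustrates that pattern with a `Brick` that keeps files in a dictionary and a `Replicate` translator that writes to every child and reads from the first child that can answer. Both classes are hypothetical; real GlusterFS translators are C modules that implement a far larger set of file operations.

```python
class Brick:
    """Bottom of the stack (toy model): stores file contents in memory."""

    def __init__(self, name):
        self.name = name
        self.files = {}
        self.online = True  # set to False to simulate a failed server

    def write(self, path, data):
        if not self.online:
            raise IOError("%s is offline" % self.name)
        self.files[path] = data

    def read(self, path):
        if not self.online:
            raise IOError("%s is offline" % self.name)
        return self.files[path]


class Replicate:
    """Toy translator: takes several subvolumes and offers them as one subvolume."""

    def __init__(self, *children):
        self.children = children

    def write(self, path, data):
        written = 0
        for child in self.children:
            try:
                child.write(path, data)
                written += 1
            except IOError:
                pass  # real replication would record this so the copy can be healed later
        if written == 0:
            raise IOError("no replica accepted the write")

    def read(self, path):
        for child in self.children:
            try:
                return child.read(path)
            except (IOError, KeyError):
                continue  # fall through to the next replica
        raise IOError("no replica could serve %s" % path)


if __name__ == "__main__":
    b1, b2 = Brick("host1"), Brick("host2")
    volume = Replicate(b1, b2)  # what the client would see as the volume
    volume.write("/test.txt", "Hello")
    b1.online = False  # simulate host1 going down
    print(volume.read("/test.txt"))  # still served by host2
```

Because `Replicate` exposes the same `read`/`write` interface as a `Brick`, it could itself be wrapped by another translator, which is how the layers stack up into the final volume.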

GlusterFS Summary

GlusterFS takes your bricks and passes them through several translators before exposing them as volumes. In a multi-host setup, the “cluster” translators (such as distribute and replicate) are responsible for distributing and replicating data across the bricks.